Published: December 10, 2025

- Business teams want copilots now.

- Vendors keep shipping “AI-native” features.

- Your current data platform is already straining under dashboards, ETL, and compliance demands.


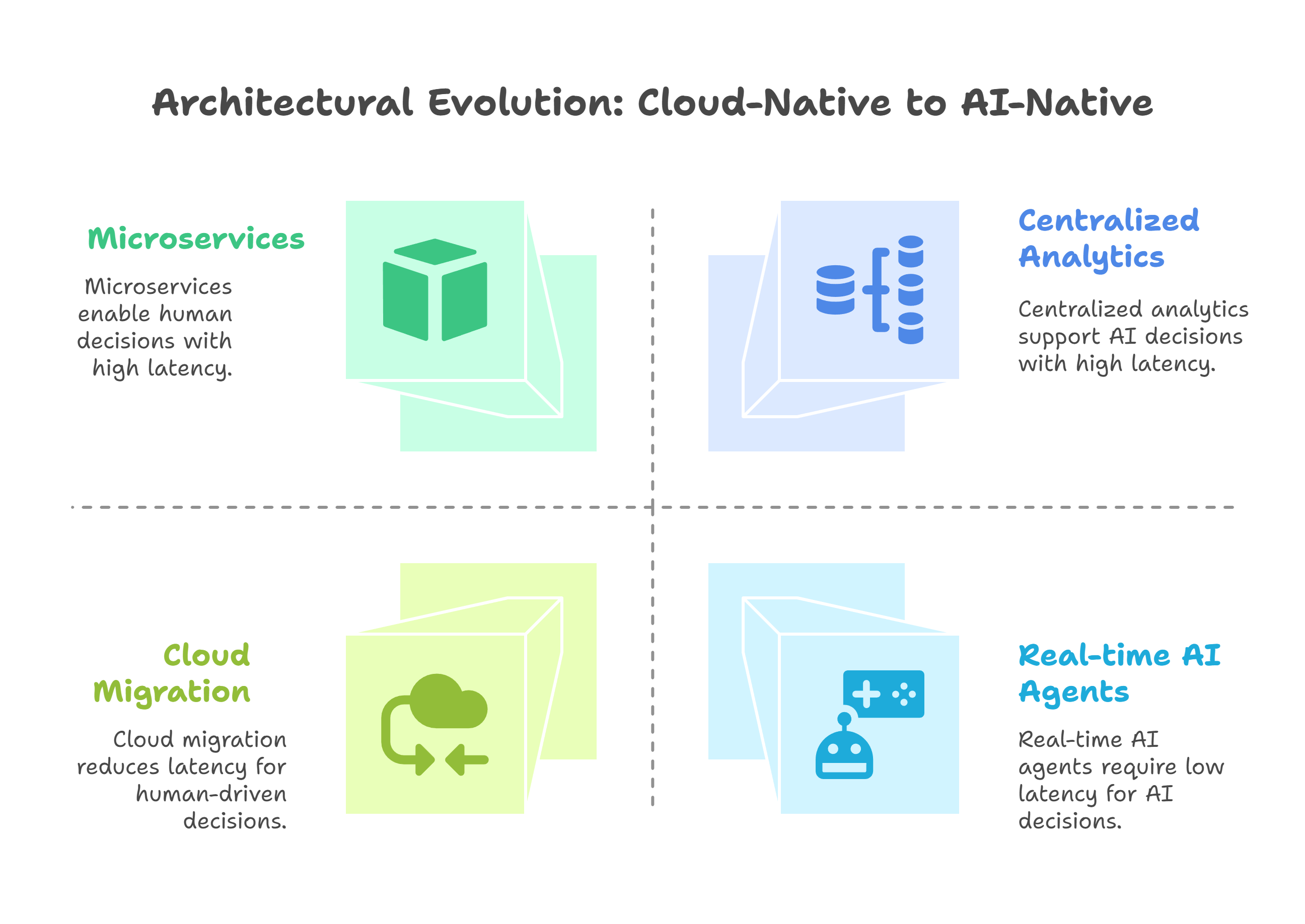

Most modernization roadmaps have followed a familiar script:

- Move to the cloud

- Break monoliths into microservices

- Centralize analytics in a warehouse or lakehouse

AI adds new requirements on top of that:

- Decisions are increasingly made or assisted by models and agents

- Context lives not only in tables but also in events, embeddings, and unstructured content

- Latency expectations drop from hours to seconds

In other words, AI can’t just be “another workload” on yesterday’s architecture. It needs an architecture designed with AI as a first-class citizen.

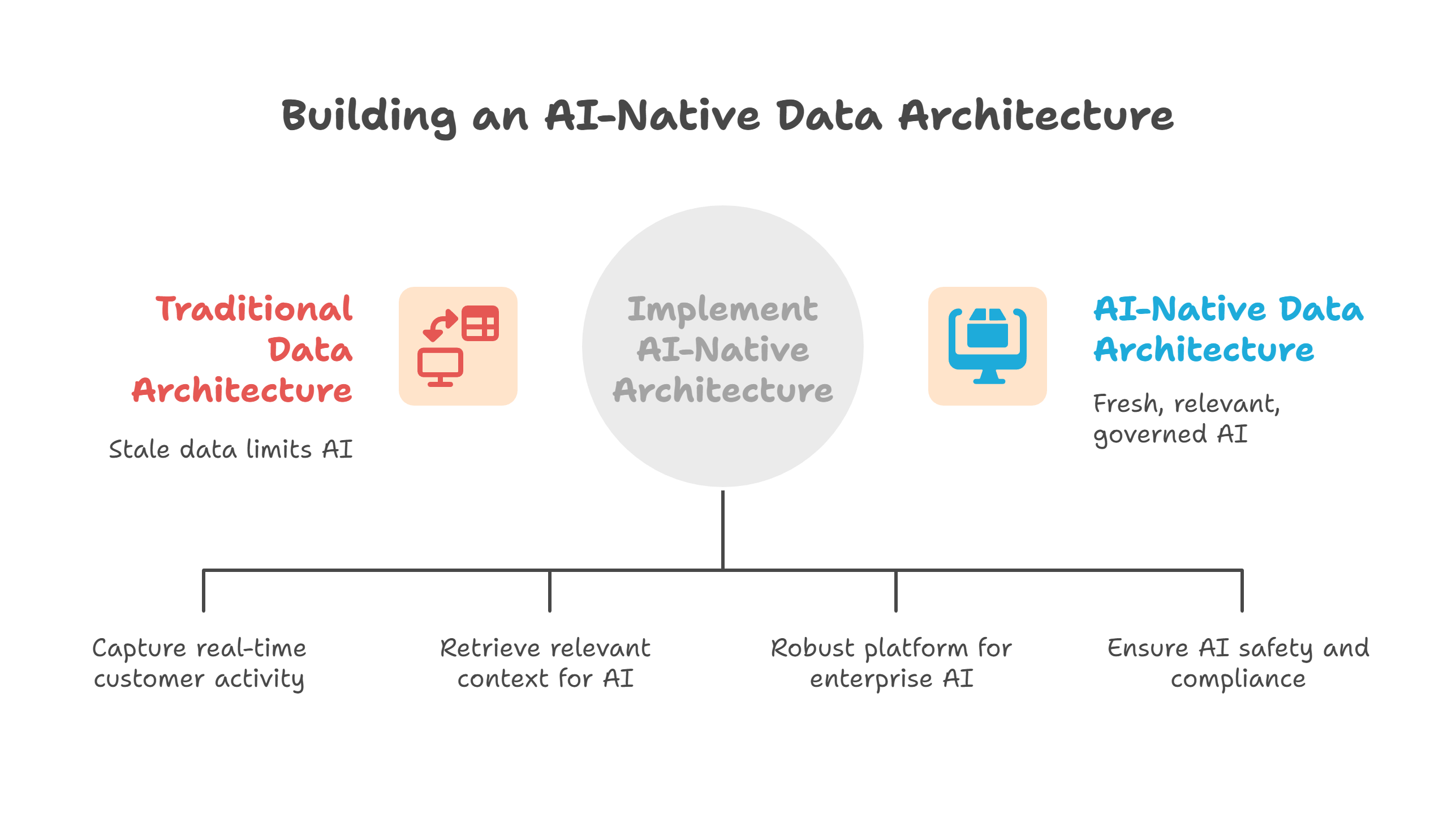

What is an AI-native data architecture (in plain terms)?

Four traits set it apart:

- Event-driven at its core: business activities show up as streams (orders, logins, transactions, interactions), not once-a-day batches.

- Vector-aware by design: embeddings and semantic search are treated as standard, not exotic.

- LLM-ready data platform: data is structured, cleaned, and governed so LLMs and agents can use it safely.

- Cloud- and SaaS-composable: you orchestrate services across Databricks, Snowflake, BigQuery, Salesforce, Informatica, Azure, and AWS as one platform, not as silos.


If your current platform makes it painful to answer questions like: “Show me similar customer cases from the last 12 months and recommend the next best action—right now, inside Salesforce.” …then you’re probably not AI-native yet.

The problem

Consider an enterprise with a familiar, reasonably modern data stack:

- ETL into a cloud data warehouse

- BI dashboards for sales, operations, and finance

- CRM in Salesforce, data integration via Informatica

- Data lake + some Databricks notebooks on top

Then leadership asked for:

- A customer-service copilot that could summarize cases and suggest resolutions

- Real-time fraud and risk alerts using behavioral signals

- Proactive “next best offer” recommendations across channels

On paper, they had the tools. In practice:

- Data was mostly batch, not event-driven

- Content was scattered: tickets in one system, emails in another, docs in a shared drive

- No vector layer for semantic search

- Governance wasn’t ready for prompts, embeddings, or agent behavior

So every AI proof-of-concept became a fragile, one-off integration that worked in the demo and struggled in production.

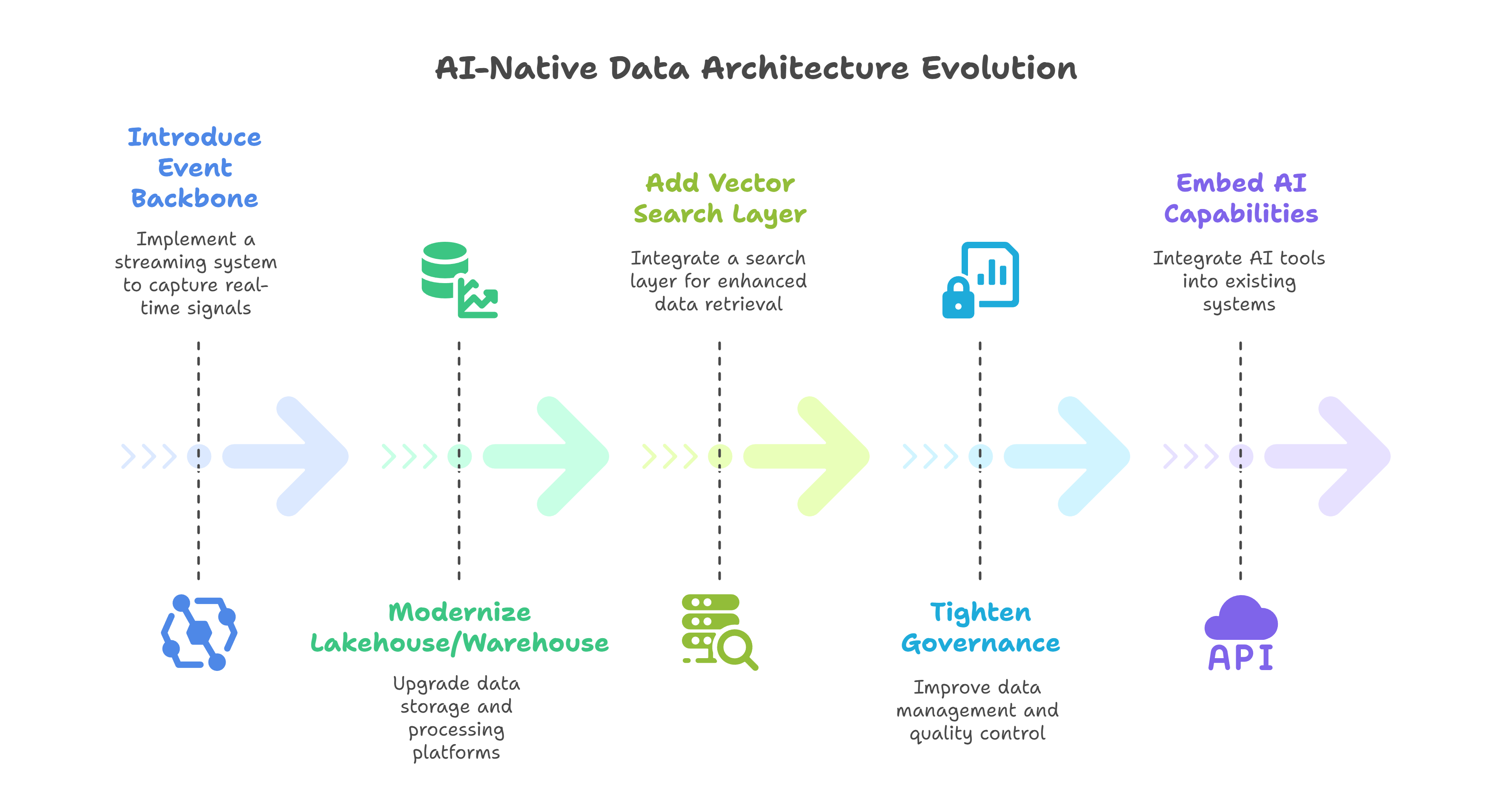

2. Modernized their lakehouse/warehouse on Snowflake and Databricks for unified analytics and ML.

3. Added a vector search layer integrated with their analytical platform.

4. Tightened governance, quality, and lineage with Informatica + cloud-native tools.

5. Embedded AI capabilities directly into Salesforce and internal portals so users never had to “go to the model.”

Within months, they were rolling out reusable AI building blocks instead of bespoke proofs-of-concept.

That shift—from project to platform—is the essence of AI-native architecture.

1. Event-driven data architecture

Capture business activity as continuous streams of events, for example:

- Customer activity: logins, clicks, calls, purchases

- Operational events: shipments, delays, outages

- System health: logs, metrics, anomalies

Tools and patterns:

- Cloud-native streaming (e.g., Kinesis, Event Hubs, Pub/Sub) or Kafka

- Salesforce event streams for CRM and engagement data

- Change Data Capture (CDC) from transactional systems

Why it matters: LLMs and agents can make better decisions when they see the latest state, not yesterday’s snapshot.
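To make "latest state, not yesterday's snapshot" concrete, here is a minimal Python sketch that folds an event stream into current per-customer state. It assumes events have already been consumed from Kafka, Kinesis, Event Hubs, Pub/Sub, or a CDC feed; the `Event` and `LatestState` classes and their fields are hypothetical illustrations, not part of any vendor's API.

```python
from dataclasses import dataclass, field
from typing import Any


@dataclass
class Event:
    """Hypothetical business event, e.g. consumed from a stream or CDC feed."""
    entity_id: str                 # e.g. a customer or order ID
    ts: int                        # event time in epoch milliseconds
    type: str                      # e.g. "login", "purchase", "shipment_delayed"
    payload: dict[str, Any] = field(default_factory=dict)


class LatestState:
    """Folds events into the current state of each entity."""

    def __init__(self) -> None:
        self._state: dict[str, dict[str, Any]] = {}
        self._last_ts: dict[str, int] = {}

    def apply(self, event: Event) -> bool:
        # Skip events older than the last one applied for this entity,
        # so a late-arriving message cannot overwrite fresher state.
        if event.ts < self._last_ts.get(event.entity_id, -1):
            return False
        current = self._state.setdefault(event.entity_id, {})
        current.update(event.payload)
        current["last_event"] = event.type
        self._last_ts[event.entity_id] = event.ts
        return True

    def get(self, entity_id: str) -> dict[str, Any]:
        # Return a copy so callers (e.g. an agent building a prompt) cannot mutate state.
        return dict(self._state.get(entity_id, {}))


state = LatestState()
state.apply(Event("c-42", 1_000, "login", {"channel": "mobile"}))
state.apply(Event("c-42", 3_000, "purchase", {"last_order_total": 120.0}))
state.apply(Event("c-42", 2_000, "login", {"channel": "web"}))  # late: ignored
print(state.get("c-42"))
# {'channel': 'mobile', 'last_event': 'purchase', 'last_order_total': 120.0}
```

In production this state would more likely live in a stream processor or a low-latency store than in a Python dict, but the idea is the same: every consumer reads the freshest view.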

2. Vector-aware by design

Tools and patterns:

- Embedding pipelines (Databricks, AWS, Azure ML, etc.) to convert text into vectors

- A vector store integrated with your lakehouse or warehouse (e.g., Snowflake’s or Databricks’ vector capabilities, BigQuery’s vector and semantic functions)

- RAG (retrieval-augmented generation) patterns so LLMs use your data, not just what they were pre-trained on
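The retrieval step of RAG fits in a few lines. This is a minimal, hedged illustration: the three-dimensional vectors are toy values standing in for the output of a real embedding model, `Chunk`, `top_k`, and `build_prompt` are hypothetical helpers, and the brute-force cosine search stands in for the approximate nearest-neighbor index a managed vector store would use.

```python
import math
from dataclasses import dataclass


@dataclass
class Chunk:
    doc_id: str
    text: str
    vector: list[float]  # toy values; a real embedding pipeline would produce these


def cosine(a: list[float], b: list[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector has zero length."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def top_k(query_vec: list[float], chunks: list[Chunk], k: int = 2) -> list[Chunk]:
    """Exact (brute-force) search; vector stores use ANN indexes at scale."""
    return sorted(chunks, key=lambda c: cosine(query_vec, c.vector), reverse=True)[:k]


def build_prompt(question: str, context: list[Chunk]) -> str:
    """Ground the model in retrieved passages and ask it to cite their IDs."""
    sources = "\n".join(f"[{c.doc_id}] {c.text}" for c in context)
    return (
        "Answer using only the sources below and cite their IDs.\n"
        f"Sources:\n{sources}\n\nQuestion: {question}"
    )


chunks = [
    Chunk("kb-1", "Admins can unlock an account from the admin console.", [0.9, 0.1, 0.0]),
    Chunk("kb-2", "Refunds over $500 need manager approval.", [0.1, 0.9, 0.1]),
    Chunk("kb-3", "Locked accounts unlock automatically after 30 minutes.", [0.7, 0.3, 0.2]),
]
query_vec = [0.85, 0.15, 0.05]  # toy embedding of "How do I unlock an account?"
hits = top_k(query_vec, chunks, k=2)
print([c.doc_id for c in hits])  # ['kb-1', 'kb-3']
prompt = build_prompt("How do I unlock an account?", hits)
```

The assembled `prompt` would then go to whichever LLM you use. Asking the model to cite `doc_id`s also makes each answer traceable to its sources.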

Use cases:

- Knowledge-aware chatbots for support

- Semantic search across policies, contracts, or SOPs

- Developer copilots aware of internal code and standards

3. A unified, LLM-ready data platform

- Lakehouse/warehouse on Databricks, Snowflake, or BigQuery

- Integration and quality pipelines via Informatica, cloud-native ETL/ELT, and APIs

- A consistent semantic layer and business definitions
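One way to picture a semantic layer: each business metric is defined once, and both BI queries and LLM prompts are generated from that single definition. The sketch below assumes a hypothetical `analytics.orders` table and a hypothetical `active_customers` metric; real semantic layers have their own definition formats, and the SQL interval syntax shown varies by dialect.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Metric:
    name: str
    description: str              # plain-language definition, reusable in prompts
    sql_expr: str                 # aggregation used by BI and SQL-generating agents
    table: str
    filters: tuple[str, ...] = ()


# Hypothetical single source of truth for business definitions.
SEMANTIC_LAYER = {
    "active_customers": Metric(
        name="active_customers",
        description="Distinct customers with at least one order in the last 90 days.",
        sql_expr="COUNT(DISTINCT customer_id)",
        table="analytics.orders",
        # Interval syntax varies by SQL dialect; adjust for your warehouse.
        filters=("order_date >= CURRENT_DATE - INTERVAL '90' DAY",),
    ),
}


def to_sql(metric_name: str) -> str:
    """Render the metric as SQL for a dashboard or an analytics agent."""
    m = SEMANTIC_LAYER[metric_name]
    where = f" WHERE {' AND '.join(m.filters)}" if m.filters else ""
    return f"SELECT {m.sql_expr} AS {m.name} FROM {m.table}{where}"


def glossary_for_prompt(names: list[str]) -> str:
    """Render the same definitions as context for an LLM."""
    return "\n".join(f"- {n}: {SEMANTIC_LAYER[n].description}" for n in names)


print(to_sql("active_customers"))
print(glossary_for_prompt(["active_customers"]))
```

Because the dashboard and the copilot read the same `Metric`, "active customer" cannot quietly mean two different things.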

AI-native design principles:

- Model data around domains and events, not just tables

- Prioritize freshness, completeness, and trust for the domains that feed AI use cases

- Track lineage so you always know where AI is getting its answers from
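Lineage answers "where did this AI answer come from?" by walking a dependency graph back to its root sources. A minimal sketch, assuming a hypothetical hand-written lineage map; in practice the graph would come from your catalog or integration tooling, and every dataset name here is illustrative.

```python
from collections import deque

# Hypothetical lineage map: each dataset -> the datasets it is built from.
LINEAGE = {
    "rag.support_answers": ["vec.kb_chunks", "crm.case_summary"],
    "vec.kb_chunks": ["docs.sop_pages"],
    "crm.case_summary": ["salesforce.case", "salesforce.email_message"],
}


def upstream_sources(dataset: str, graph: dict[str, list[str]]) -> list[str]:
    """Breadth-first walk from `dataset` to every root source that feeds it."""
    seen = {dataset}
    roots = []
    queue = deque([dataset])
    while queue:
        node = queue.popleft()
        parents = graph.get(node, [])
        if not parents and node != dataset:
            roots.append(node)  # nothing upstream: a root source
        for parent in parents:
            if parent not in seen:  # guard against shared parents and cycles
                seen.add(parent)
                queue.append(parent)
    return sorted(roots)


print(upstream_sources("rag.support_answers", LINEAGE))
# ['docs.sop_pages', 'salesforce.case', 'salesforce.email_message']
```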

4. Governance, risk, and control for AI

- Access controls for sensitive data used in prompts and RAG

- Policies for what AI agents can and can’t do (e.g., read-only vs. transactional actions)

- Monitoring for data drift, prompt injections, and misuse

- Audit trails for who used which models and data, and when
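Policies such as "read-only vs. transactional actions" and audit trails can be enforced at the point where an agent calls a tool. The sketch below is a hypothetical, deny-by-default policy check; the agent names, tool names, and `human_approved` flag are assumptions for illustration, not features of a specific agent framework.

```python
from dataclasses import dataclass
from datetime import datetime, timezone

# Hypothetical tool catalog: "read" tools only query data, "write" tools change it.
TOOL_KIND = {"lookup_case": "read", "search_kb": "read", "issue_refund": "write"}

# Hypothetical per-agent allow-lists (anything not listed is denied).
AGENT_TOOLS = {
    "support_copilot": {"lookup_case", "search_kb"},  # read-only agent
    "billing_agent": {"lookup_case", "issue_refund"},
}


@dataclass
class AuditRecord:
    at: str
    agent: str
    user: str
    tool: str
    allowed: bool


AUDIT_LOG: list[AuditRecord] = []


def authorize(agent: str, user: str, tool: str, human_approved: bool = False) -> bool:
    """Deny by default; write tools also need human approval; log every decision."""
    kind = TOOL_KIND.get(tool)
    allowed = (
        kind is not None
        and tool in AGENT_TOOLS.get(agent, set())
        and (kind == "read" or human_approved)
    )
    AUDIT_LOG.append(AuditRecord(
        at=datetime.now(timezone.utc).isoformat(),
        agent=agent, user=user, tool=tool, allowed=allowed,
    ))
    return allowed


print(authorize("support_copilot", "ana", "search_kb"))     # True
print(authorize("support_copilot", "ana", "issue_refund"))  # False: not on allow-list
print(authorize("billing_agent", "ana", "issue_refund"))    # False: no human approval
print(authorize("billing_agent", "ana", "issue_refund", human_approved=True))  # True
```

Logging denied calls as well as allowed ones is deliberate: attempted misuse is exactly what auditors and security teams will want to see.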

Why it matters: Regulators, customers, and internal stakeholders will ask: “How do you know this AI is safe, compliant, and explainable?” You need answers built into the architecture.

A practical runbook: how to get started (without boiling the ocean)

1. Pick one or two high-impact AI use cases

- For example: a support copilot, collections prioritization, or real-time risk scoring.

- Align them with specific KPIs (savings, revenue, CSAT, cycle time).

2. Map the data those use cases need

- What events, tables, and docs are needed?

- Where do they live today (Salesforce, ERP, data warehouse, data lake)?

3. Make the critical data event-driven

- Stream key changes instead of waiting for batch loads.

- Standardize event schemas so multiple teams can consume them.
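A shared event envelope is what lets several teams consume the same stream. This sketch assumes a hypothetical JSON envelope (`event_id`, `event_type`, `schema_version`, `occurred_at`, `source`, `payload`) and a hand-rolled validator; many teams use a schema registry with Avro, Protobuf, or JSON Schema instead, which this does not attempt to replicate.

```python
import json
import uuid
from datetime import datetime, timezone

# Hypothetical shared envelope that every producing team agrees to emit.
REQUIRED_FIELDS = {
    "event_id": str,
    "event_type": str,       # e.g. "order.created"
    "schema_version": int,   # bump when the payload changes incompatibly
    "occurred_at": str,      # ISO-8601 timestamp in UTC
    "source": str,           # e.g. "salesforce", "erp"
    "payload": dict,
}


def make_event(event_type: str, source: str, payload: dict, schema_version: int = 1) -> dict:
    return {
        "event_id": str(uuid.uuid4()),
        "event_type": event_type,
        "schema_version": schema_version,
        "occurred_at": datetime.now(timezone.utc).isoformat(),
        "source": source,
        "payload": payload,
    }


def validate(event: dict) -> list[str]:
    """Return a list of problems; an empty list means the event conforms."""
    errors = []
    for name, expected in REQUIRED_FIELDS.items():
        if name not in event:
            errors.append(f"missing field: {name}")
        elif not isinstance(event[name], expected):
            errors.append(f"{name}: expected {expected.__name__}")
    return errors


evt = make_event("order.created", "erp", {"order_id": "o-1001", "amount": 250.0})
print(validate(evt))                     # []
print(validate({"event_type": "x"})[0])  # missing field: event_id
wire = json.dumps(evt)  # what a producer would publish to the stream
```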

4. Add a shared vector and RAG layer

- Choose where vectors will live (Databricks, Snowflake, BigQuery, or a managed vector store).

- Build one or two RAG-based services that multiple apps can call.

5. Put AI governance in place

- Classify sensitive data used by LLMs.

- Define policies for who can use which models and datasets.

- Ensure logging, monitoring, and auditability are in place.
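Classifying sensitive data before it reaches a prompt can start as simply as pattern matching. This is a deliberately naive sketch: the regex rules and labels are hypothetical, will miss many real-world formats, and would normally be backed by catalog sensitivity tags or a dedicated PII-detection service.

```python
import re

# Hypothetical, deliberately simple detection rules (label -> pattern).
PATTERNS = {
    "EMAIL": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),        # US SSN format
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),    # 13-16 digit card numbers
}


def classify(text: str) -> set[str]:
    """Labels of every sensitive-data type detected in the text."""
    return {label for label, rx in PATTERNS.items() if rx.search(text)}


def redact(text: str) -> str:
    """Replace each match with its label before the text goes into a prompt."""
    for label, rx in PATTERNS.items():
        text = rx.sub(f"[{label}]", text)
    return text


note = "Customer ana@example.com paid with 4111 1111 1111 1111; SSN 123-45-6789."
print(sorted(classify(note)))  # ['CARD', 'EMAIL', 'SSN']
print(redact(note))            # Customer [EMAIL] paid with [CARD]; SSN [SSN].
```

`classify` feeds access-control decisions (which prompts may include this record at all), while `redact` is a fallback for content that must be sent anyway.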

6. Operationalize

- CI/CD for data pipelines and models.

- Alerting on data quality, pipeline failures, and model performance.

- Clear ownership: data product owners + AI platform team.
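Alerting on data quality can begin with a few explicit rules per dataset: volume, null rate on a key column, and freshness. A minimal sketch with a hypothetical `QualityRule` and dataset name; the returned messages would be routed to whatever alerting or incident tool your team already uses.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class QualityRule:
    dataset: str
    key_column: str
    min_rows: int
    max_null_rate: float      # allowed fraction of rows with a null key_column
    max_staleness: timedelta  # how old the last successful load may be


def check(rule: QualityRule, rows: list[dict], last_loaded: datetime, now: datetime) -> list[str]:
    """Return alert messages; an empty list means the dataset passed."""
    alerts = []
    if len(rows) < rule.min_rows:
        alerts.append(f"{rule.dataset}: {len(rows)} rows, expected at least {rule.min_rows}")
    if rows:
        nulls = sum(1 for r in rows if r.get(rule.key_column) is None)
        rate = nulls / len(rows)
        if rate > rule.max_null_rate:
            alerts.append(f"{rule.dataset}: null rate {rate:.0%} in {rule.key_column}")
    if now - last_loaded > rule.max_staleness:
        alerts.append(f"{rule.dataset}: last loaded {last_loaded.isoformat()}")
    return alerts


rule = QualityRule("crm.case_summary", "customer_id", min_rows=3,
                   max_null_rate=0.1, max_staleness=timedelta(minutes=15))
now = datetime(2025, 1, 1, 12, 0, tzinfo=timezone.utc)
rows = [{"customer_id": "c1"}, {"customer_id": None}, {"customer_id": "c3"}, {"customer_id": "c4"}]
for alert in check(rule, rows, last_loaded=now - timedelta(hours=1), now=now):
    print(alert)  # in practice, send to your alerting tool instead of printing
```

Keeping rules as data (rather than code) lets data product owners tune thresholds for the domains that feed AI use cases without touching the pipeline.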


You do not have to rebuild everything. Start slice-by-slice, with the use cases that matter most.

As an IT or data leader, you’ll need more than anecdotes. Track KPIs like:

1. Time to production

- From idea to live in production.

- Goal: weeks, not quarters.

2. Real-time coverage

- % of key AI decisions using event-driven data instead of batch.

3. Retrieval and response quality

- User feedback scores for AI-powered experiences.

- Accuracy and relevance of responses from RAG-based systems.

4. Platform reuse

- How many use cases reuse the same embeddings, events, and data products?

- Higher reuse means less technical debt and faster delivery.

5. Business outcome per use case

- Revenue lift, cost reduction, or productivity gain per AI capability.

- Ties architecture to the language your CFO cares about.
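Several of these KPIs reduce to simple arithmetic once use cases are tracked consistently. The sketch below assumes a hypothetical `UseCase` record and made-up example values. It computes median time to production, real-time coverage, and an asset reuse rate, defined here as the share of shared assets used by more than one use case. That definition is one possible way to quantify platform reuse, not a standard metric.

```python
from collections import Counter
from dataclasses import dataclass, field
from datetime import date
from statistics import median
from typing import Optional


@dataclass
class UseCase:
    name: str
    idea_date: date
    live_date: Optional[date]     # None while still in development
    decisions_total: int          # AI decisions made in the reporting period
    decisions_event_driven: int   # ...of which used streaming rather than batch inputs
    assets: set[str] = field(default_factory=set)  # embeddings, streams, data products


def platform_kpis(use_cases: list[UseCase]) -> dict[str, Optional[float]]:
    lead_times = [(u.live_date - u.idea_date).days for u in use_cases if u.live_date]
    total = sum(u.decisions_total for u in use_cases)
    streaming = sum(u.decisions_event_driven for u in use_cases)
    usage = Counter(a for u in use_cases for a in u.assets)  # asset -> number of use cases
    return {
        "median_days_to_production": median(lead_times) if lead_times else None,
        "real_time_coverage": streaming / total if total else 0.0,
        "asset_reuse_rate": sum(1 for n in usage.values() if n > 1) / len(usage) if usage else 0.0,
    }


portfolio = [
    UseCase("support_copilot", date(2025, 1, 6), date(2025, 2, 17), 1000, 800,
            {"kb_embeddings", "case_events"}),
    UseCase("risk_alerts", date(2025, 2, 3), date(2025, 3, 3), 500, 500,
            {"txn_events", "case_events"}),
    UseCase("next_best_offer", date(2025, 3, 3), None, 0, 0,
            {"kb_embeddings", "customer_360"}),
]
print(platform_kpis(portfolio))
```

Business outcome per use case is deliberately left out: it depends on finance data and attribution choices that vary too much to sketch generically.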

Common pitfalls (and how to avoid them)

Even strong teams stumble on similar issues:

1. Treating AI as a bolt-on

Bolting AI onto existing systems one project at a time leads to:

- Duplicate pipelines

- Inconsistent answers

- Nightmarish governance

2. Ignoring events and vectors

Many AI initiatives rest on little more than:

- Tables in a warehouse

- A couple of nightly jobs

- A standalone chatbot

Fix: Plan explicitly for event streaming and vector search as architectural pillars, not nice-to-haves.

3. Scattered ownership

When every team makes its own AI platform decisions, you get:

- Competing standards

- One-off choices for vector stores

- Conflicting governance rules

Fix: Create a joint AI Platform & Data Architecture Council (CDO, CIO, head of data engineering, security, and key business leaders).

4. Over-focusing on models, under-focusing on plumbing

Model selection gets the attention while the fundamentals get neglected:

- Data contracts

- Lineage

- Reliability

Fix: Treat models as consumers of your architecture. If you get events, vectors, and governance right, you can swap or upgrade models with far less friction.


Who should lean into this now?

This is especially pressing if you:

- Lead IT, enterprise architecture, or data platforms in a mid-to-large North American enterprise

- Run a hybrid stack spanning Salesforce, Informatica, Snowflake/Databricks/BigQuery, Azure, or AWS

- Are under pressure to deliver LLM-enabled features, agents, and copilots that actually reach production

If that sounds like you, AI-native data architecture isn’t just a trend—it’s your next operating requirement.

Where to go from here

- Pick one or two customer-facing AI use cases

- Map how your current architecture helps or blocks them

- Design a target AI-native blueprint that reuses your existing platforms (Informatica, Salesforce, Databricks, Snowflake, BigQuery, Azure, AWS) rather than replacing them

If you’d like a working session to sketch that blueprint and turn it into a concrete roadmap, the Pacific Data Integrators team can help you connect the dots between strategy, architecture, and delivery.

👉 Ready to explore what an AI-native data architecture could look like for your organization? Request a working session or demo.


Blog Post by PDI Marketing Team

Pacific Data Integrators offers unique data solutions leveraging AI/ML, large language models (OpenAI GPT-4, Meta Llama 2, Databricks Dolly), cloud, data management, and analytics technologies, helping leading organizations solve critical business challenges, drive data-driven insights, improve decision-making, and achieve business objectives.