- 9:00 – “Can we turn on this new AI feature in Salesforce?”

- 10:30 – “Legal wants to talk about AI risk.”

- 1:00 – “Board update: where are we on generative AI?”

Somewhere between the excitement and the pressure, one theme keeps surfacing:

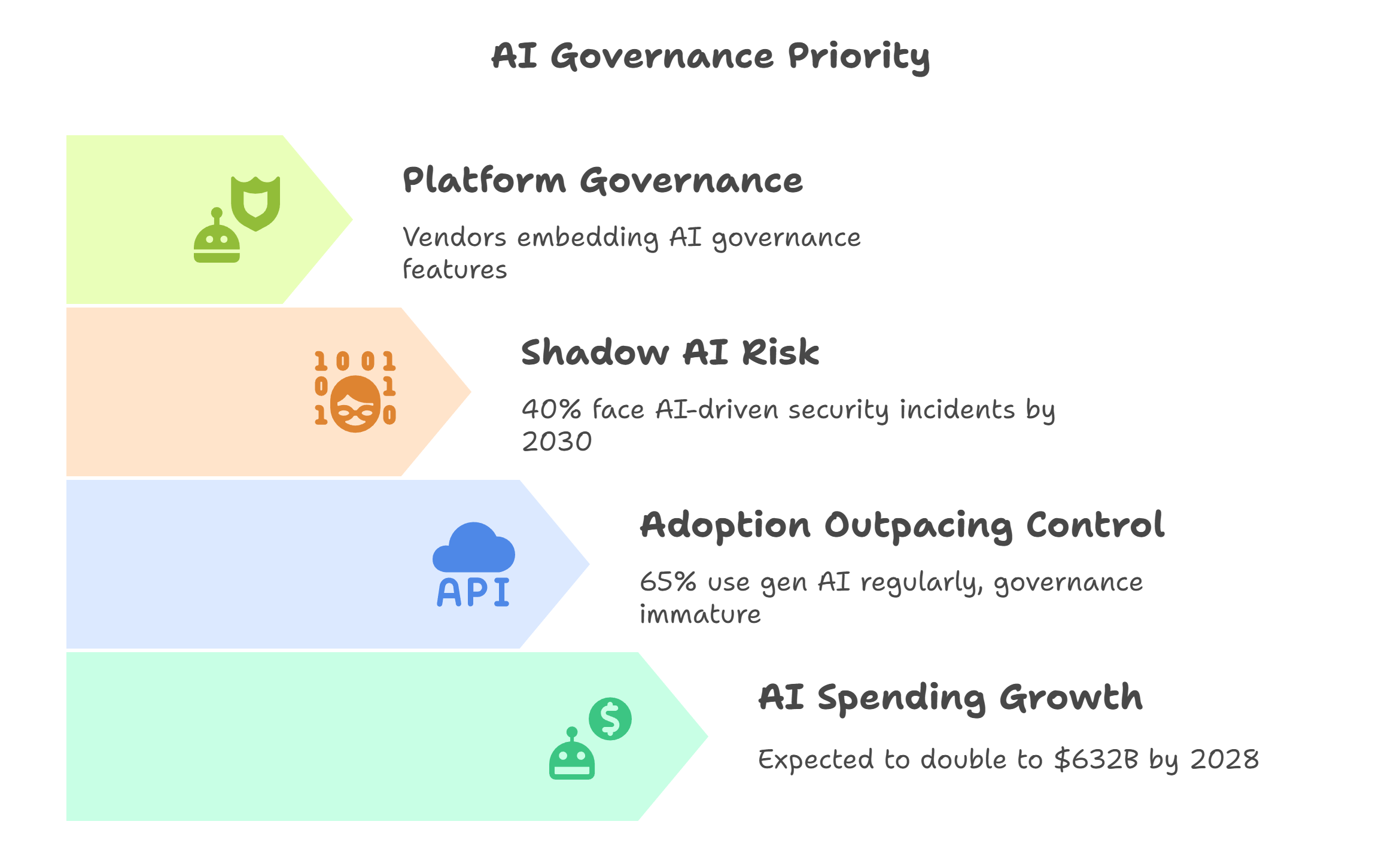

McKinsey’s 2025 State of AI survey reports that 65% of organizations now regularly use generative AI, nearly double the share from just over a year earlier. At the same time, Gartner predicts that by 2030, more than 40% of global organizations will suffer security or compliance incidents due to unauthorized “shadow AI” tools. (businessabc.net)

So the question isn’t “Are we using AI?” anymore. It’s: Can we scale LLMs and AI agents without losing control of our data, our brand, or our risk posture?

This blog is your practical guide to answering “yes.”

Picture a North America–based CIO at a global financial services firm.

Over 12 months, her teams quietly rolled out:

- A gen AI assistant for developers

- A support copilot pulling knowledge from Salesforce

- A pilot of AI agents that draft outreach campaigns

The results were promising: faster responses, fewer manual tasks, happier teams.

Then three things happened in the same quarter:

She needed AI governance that matched the scale of AI adoption.

What AI governance really means (beyond slideware)

Vendors such as Informatica define AI governance as the policies, processes, and systems that make AI accountable, transparent, fair, and compliant across its lifecycle. (Informatica)

– Tools, models, SaaS features, agents, and home-grown apps.

2. What data is it touching?

– PII, contracts, CRM records, logs, and knowledge bases.

3. Who is accountable?

– For outcomes, incidents, approvals, and ongoing monitoring.

4. What guardrails are in place?

– Policies and technical controls that are enforced by design.


Think of it as moving from “don’t do anything risky” to “here’s how we do AI safely, repeatedly, and at scale.”

- Informatica is embedding data and AI governance into its Intelligent Data Management Cloud (IDMC), helping organizations manage bias, security, compliance, and transparency as part of AI lifecycles. (Informatica Knowledge)

- Salesforce is investing heavily in its Einstein Trust Layer, a set of guardrails (dynamic grounding, zero data retention, data masking, toxicity detection) designed to keep generative AI secure and compliant across its CRM ecosystem. (Salesforce)

The message to CIOs is clear: If AI is strategic, AI governance needs an owner—and that owner is increasingly you.

- Read and write to systems like Salesforce and other line-of-business apps

- Act on behalf of humans in workflows (e.g., drafting emails, updating opportunities, generating knowledge articles)

- Pull context from multiple sources (data lakes, CRMs, knowledge bases, logs)

- Learn from prompts, examples, and feedback

That introduces new types of risk:

- Data leakage – Sensitive customer or deal information ends up in a place it shouldn’t.

- Wrong-but-confident outputs – LLMs hallucinate, but the UI looks authoritative.

- Autonomous actions – Agents make updates or decisions that bypass standard controls.

- Compliance blind spots – Regulatory requirements (privacy, audit, sector rules) lag implementations.

Governance is how you turn these from blockers into design constraints.

Use this as a starting framework and adapt it to your context.


- Inventory approved AI: LLM-based features in Salesforce, AI-enabled workflows, internal copilots, and models deployed in your data platforms.

- Capture unapproved or informal usage via surveys, logs, and security tools: where are employees turning when official tools don’t exist?

Outcome: A living register of AI tools, use cases, systems touched, and data categories.
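As a rough illustration, the register can start as a small typed record rather than a heavyweight tool. The sketch below is one possible shape, not a prescribed schema: the field names, the `Status` values, the owner fields, and the example rows are all assumptions made up for this post.

```python
from dataclasses import dataclass
from enum import Enum
from typing import List, Optional


class Status(Enum):
    APPROVED = "approved"
    UNAPPROVED = "unapproved"  # surfaced by surveys, logs, or security tools


@dataclass
class RegisterEntry:
    """One row of the AI register. Field names are illustrative assumptions."""
    tool: str
    use_case: str
    systems_touched: List[str]
    data_categories: List[str]
    status: Status
    business_owner: Optional[str] = None
    technical_owner: Optional[str] = None


def needs_attention(entry: RegisterEntry) -> bool:
    """Flag entries that are unapproved or missing a named owner."""
    return (
        entry.status is Status.UNAPPROVED
        or entry.business_owner is None
        or entry.technical_owner is None
    )


# Made-up example rows, loosely based on the scenario in this post.
register = [
    RegisterEntry(
        tool="Support copilot",
        use_case="Answer support cases from CRM knowledge",
        systems_touched=["Salesforce"],
        data_categories=["CRM records", "knowledge base"],
        status=Status.APPROVED,
        business_owner="Head of Support",
        technical_owner="CRM platform lead",
    ),
    RegisterEntry(
        tool="Public chatbot (browser)",
        use_case="Summarize contracts",
        systems_touched=[],
        data_categories=["contracts"],
        status=Status.UNAPPROVED,
    ),
]

flagged = [e.tool for e in register if needs_attention(e)]
print(flagged)
```

Even a list like this gives you something to review each quarter: which entries are unapproved, and which have no named owner.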

- “No sensitive data in external models without approved controls.”

- “Humans remain accountable for high-impact decisions.”

- “Every AI use case has a named business owner and technical owner.”

Translate those principles into lightweight policies and approval paths, not 80-page PDFs nobody reads.

- Use tools like Informatica to classify and protect data that might feed AI (prompts, RAG, training, fine-tuning). (Informatica)

- Define which domains are “AI-eligible” and under what conditions (e.g., only anonymized, masked, or aggregated).

- Ensure your Salesforce data respects the same rules when used for AI features; this is where Salesforce’s Trust Layer can enforce policies at the platform level. (Salesforce)
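To make “AI-eligible under what conditions” concrete, here is a minimal sketch of an eligibility table with a deny-by-default lookup. It is not an Informatica or Salesforce API; the domain names, the `Treatment` values, and the specific rules are hypothetical and would come from your own data classification.

```python
from enum import Enum


class Treatment(Enum):
    RAW = "raw"
    MASKED = "masked"
    ANONYMIZED = "anonymized"
    AGGREGATED = "aggregated"


# Hypothetical policy: data domain -> treatments under which it may feed AI.
AI_ELIGIBILITY = {
    "knowledge_base": {Treatment.RAW, Treatment.MASKED},
    "crm_records": {Treatment.MASKED, Treatment.ANONYMIZED, Treatment.AGGREGATED},
    "contracts": {Treatment.ANONYMIZED},
    "pii": set(),  # never eligible in this example policy
}


def is_ai_eligible(domain: str, treatment: Treatment) -> bool:
    """Return True only if the policy explicitly allows this combination.

    Unknown domains are denied by default.
    """
    return treatment in AI_ELIGIBILITY.get(domain, set())


print(is_ai_eligible("crm_records", Treatment.MASKED))
print(is_ai_eligible("crm_records", Treatment.RAW))
print(is_ai_eligible("hr_reviews", Treatment.ANONYMIZED))
```

The design choice worth copying is the default: a domain nobody has classified yet is treated as not eligible until someone adds it to the table.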

4. Create a model & tool approval lane

- Maintain an approved AI stack: models, SaaS features, and in-house services.

- Define when teams must do a risk assessment (e.g., customer-facing, financial, regulated).

- Provide reference architectures for common patterns: RAG, summarization, agent-based workflows.

The goal: make it easier to do AI the right way than to roll your own in the shadows.
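One way to keep the approval lane predictable is to write the triggers down as a rule teams can read. The following is a sketch under assumptions: the lane names (`fast-track`, `risk-assessment`, `full-review`) and the request fields are invented for illustration, and your own triggers may differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UseCaseRequest:
    """A request to launch an AI use case. Fields are illustrative."""
    name: str
    customer_facing: bool = False
    financial_impact: bool = False
    regulated_data: bool = False
    uses_approved_stack: bool = True


def approval_lane(req: UseCaseRequest) -> str:
    """Route a request to a lane based on simple, published triggers."""
    if not req.uses_approved_stack:
        # Anything outside the approved stack gets the heaviest review.
        return "full-review"
    if req.customer_facing or req.financial_impact or req.regulated_data:
        return "risk-assessment"
    return "fast-track"


internal = UseCaseRequest(name="Summarize internal meeting notes")
outreach = UseCaseRequest(name="Agent drafts outreach campaigns", customer_facing=True)
custom = UseCaseRequest(name="Team-hosted open model", uses_approved_stack=False)

for req in (internal, outreach, custom):
    print(req.name, "->", approval_lane(req))
```

Because the rule is short and explicit, a team can tell before filing a request which lane it will land in.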

- Use Salesforce Einstein Trust Layer to control what data flows to LLMs and to log interactions for audit and troubleshooting. (Salesforce)

- Use Informatica IDMC and associated governance capabilities to ensure the data pipeline into AI is high-quality, documented, and policy-compliant. (Informatica Knowledge)

- Add central logging and monitoring for prompts, responses, and downstream actions (especially agents that can write back to systems).
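For custom apps that sit outside platform guardrails, central logging can be as simple as tying each prompt, response, and write-back to one trace ID. This is a minimal sketch only: the in-memory `AUDIT_LOG`, the function names, and the fake model are assumptions standing in for a real log sink and a real LLM client.

```python
import json
import time
import uuid
from typing import Callable, Dict, List, Tuple

# Stand-in for a central log sink (SIEM, data platform, etc.).
AUDIT_LOG: List[str] = []


def record(trace_id: str, event: str, detail: Dict[str, str]) -> None:
    """Append one JSON line per event so related events share a trace ID."""
    AUDIT_LOG.append(json.dumps(
        {"trace_id": trace_id, "event": event, "ts": time.time(), **detail}
    ))


def governed_call(model: Callable[[str], str], user: str, prompt: str) -> Tuple[str, str]:
    """Call the model and log both sides; returns (trace_id, response)."""
    trace_id = str(uuid.uuid4())
    record(trace_id, "prompt", {"user": user, "text": prompt})
    response = model(prompt)
    record(trace_id, "response", {"text": response})
    return trace_id, response


def record_action(trace_id: str, system: str, action: str) -> None:
    """Log a downstream write-back, e.g. an agent updating a CRM record."""
    record(trace_id, "action", {"system": system, "action": action})


# Fake model so the sketch runs without any vendor SDK.
def fake_model(prompt: str) -> str:
    return "DRAFT: " + prompt


trace_id, reply = governed_call(fake_model, "jdoe", "Follow up on renewal")
record_action(trace_id, "Salesforce", "update_opportunity")
events = [json.loads(line) for line in AUDIT_LOG]
print([e["event"] for e in events])
```

In a real deployment the prompt and response text would likely need masking before they reach the log, since the log itself becomes a store of sensitive data.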

6. Measure, iterate, and communicate

- Review AI incidents, usage, and ROI quarterly with an AI governance council.

- Update your register of tools and use cases regularly.

- Communicate decisions clearly: what’s allowed, what’s not, and why.

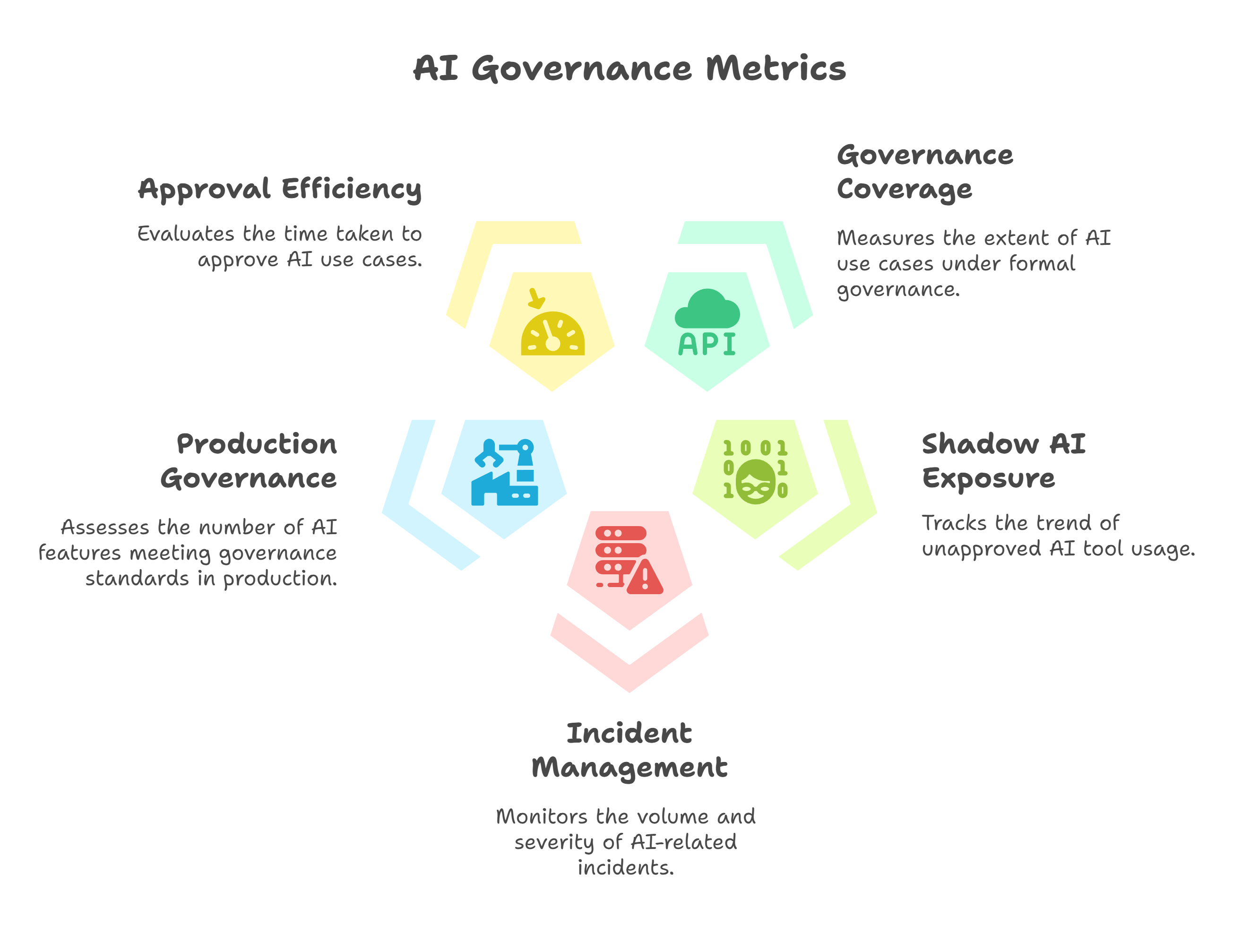

KPIs that show whether your AI governance is working

- % of AI use cases under governance

  - Number of registered, approved AI use cases / total discovered use cases.

- Shadow AI exposure trend

  - Change in unapproved AI tool usage over time (from surveys, CASB, or network insights).

- AI incident volume & severity

  - AI-related security, privacy, or compliance incidents per quarter, plus mean time to detect and resolve.

- Governed AI in production

  - Number of AI features and agents in production that meet governance standards (not just pilots), and their associated business impact.

- Time-to-approve AI use cases

  - Average cycle time from request → approved → first release.

  - If this is too slow, people will route around governance.
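Two of these KPIs reduce to simple arithmetic once the register exists. The sketch below shows one way to compute them; the function names and the sample numbers are made up for illustration, and the time-to-approve version only covers the request-to-approval leg.

```python
from datetime import date
from statistics import mean
from typing import List, Tuple


def governance_coverage(approved: int, discovered: int) -> float:
    """Percent of discovered AI use cases that are registered and approved."""
    if discovered == 0:
        return 0.0
    return 100.0 * approved / discovered


def mean_days_to_approve(requests: List[Tuple[date, date]]) -> float:
    """Average days from request date to approval date."""
    return float(mean([(approved - requested).days for requested, approved in requests]))


# Illustrative numbers only.
coverage = governance_coverage(approved=18, discovered=24)
cycle = mean_days_to_approve([
    (date(2025, 1, 6), date(2025, 1, 20)),   # 14 days
    (date(2025, 2, 3), date(2025, 2, 13)),   # 10 days
])
print(coverage, cycle)
```

Tracking both together matters: coverage can look healthy while cycle time quietly grows, which is usually the early signal that teams will start routing around the process.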

Common pitfalls (and how to avoid them)

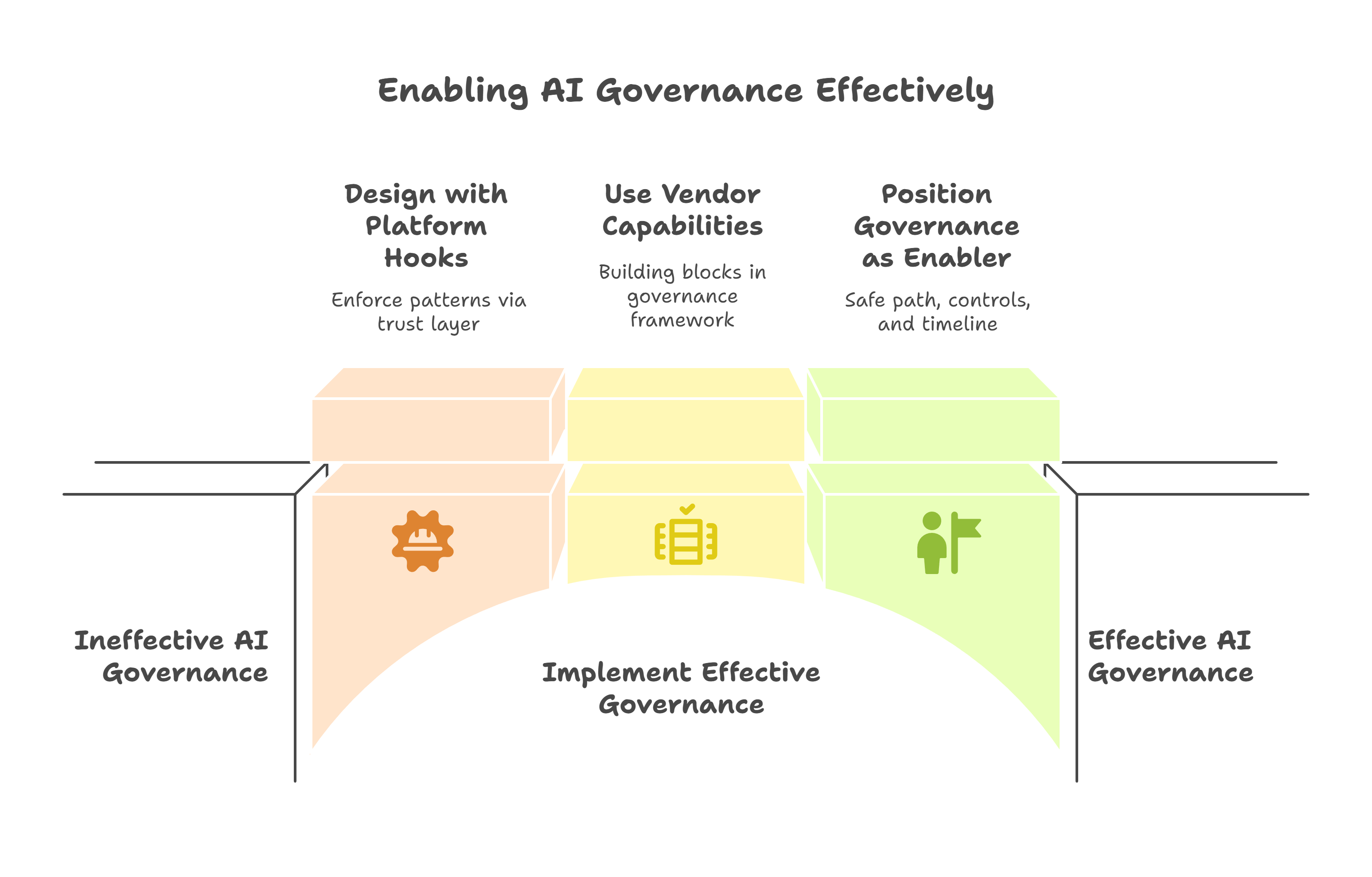

- Compliance theater

- Frustrated teams

- Shadow AI workarounds

Try this instead:

Design governance with platform hooks from day one—for example, enforce patterns in Salesforce via the Trust Layer, and in data pipelines via Informatica’s governance tools, so compliance happens by default. (Informatica Knowledge)

Pitfall 2: Over-focusing on one vendor

No single tool will “do AI governance for you.” Your environment spans CRM, data platforms, integration layers, and custom apps.

Try this instead:

Use vendor capabilities as building blocks in an overall governance framework:

- Salesforce = engagement + trust layer

- Informatica = data and AI governance backbone

- Your own platforms = integration of logs, policies, and controls

Pitfall 3: Saying “no” instead of “yes, and…”

If the default answer to AI ideas is “no” or “not yet,” business teams will:

- Move to unsanctioned tools

- See IT as a blocker, not a partner

Try this instead:

Position governance as the enabler: “Yes, we can do that—here’s the path, controls, and timeline that make it safe.”

Who needs to lean in right now?

This conversation is especially urgent for:

- CIOs and heads of IT who are accountable for AI risk but don’t yet have a clear governance framework.

- CDOs and data leaders who must extend data governance into LLMs, RAG, and agents.

- Technology leaders in North America whose boards or regulators are already asking AI questions.

If your organization is piloting copilots and agents in tools like Salesforce, or building on top of governed data platforms like Informatica, this is your window to align innovation with control—before scale makes it harder.

Where to go from here

You don’t need a 50-page AI governance manifesto to get started. What you need is:

- A clear, CIO-owned AI governance framework

- A map of your current AI landscape (including shadow AI)

- A plan to embed guardrails into the platforms where work actually happens

If you’d like a structured way to do that—grounded in real enterprise platforms and not just theory—the Pacific Data Integrators team can help you assess your current posture and design a practical roadmap.

👉 Ready to explore what AI governance could look like for your organization as you scale LLMs and agents?

Request a working session or demo

Blog Post by PDI Marketing Team

Pacific Data Integrators Offers Unique Data Solutions Leveraging AI/ML, Large Language Models (OpenAI: GPT-4, Meta: Llama 2, Databricks: Dolly), Cloud, Data Management and Analytics Technologies, Helping Leading Organizations Solve Their Critical Business Challenges, Drive Data-Driven Insights, Improve Decision-Making, and Achieve Business Objectives.
