Published: January 7, 2026

Introduction

It’s 4:45 PM on a Friday. Your agentic AI pilot just went “production-light” for a customer-ops workflow: read customer context, check entitlement, and update a Salesforce case with the right priority and routing.

It’s 4:45 PM on a Friday. Your agentic AI pilot just went “production-light” for a customer-ops workflow: read customer context, check entitlement, and update a Salesforce case with the right priority and routing.

The model behaves perfectly in testing. Then the incident happens anyway.

Not because the model hallucinated—but because the agent pulled a stale entitlement record, matched the wrong duplicate account, and confidently triggered the wrong downstream automation.

That’s the uncomfortable truth IT leaders are running into in 2026: agentic AI turns data quality from a reporting issue into an operational reliability issue. Gartner has warned that organizations that don’t adapt for “AI-ready data” put AI efforts at risk—and even predicts many AI projects will be abandoned without it. (Gartner)

This blog is a practical guide to make agentic workflows safe and dependable with data quality management, data quality control, data quality assurance, and data control—without turning into a never-ending “clean all data” program.

Why agentic AI projects fail in production (it’s usually data)

Traditional analytics can tolerate “mostly correct” data. Agentic workflows can’t—because they act.

The three most common data-driven failure modes

1. Bad context in → confident action out

If the agent’s context is wrong (duplicates, stale status, missing fields), its action can be wrong at scale.

If the agent’s context is wrong (duplicates, stale status, missing fields), its action can be wrong at scale.

2. Silent drift

Pipelines still run, but the meaning changes: a status code gets repurposed, a mapping changes, a definition shifts (“active customer”), and the agent’s behavior degrades quietly.

Pipelines still run, but the meaning changes: a status code gets repurposed, a mapping changes, a definition shifts (“active customer”), and the agent’s behavior degrades quietly.

3. Blast radius amplification

A single defect in a Tier-0 dataset can propagate through: lakehouse/warehouse → agent → Salesforce → downstream workflows.

A single defect in a Tier-0 dataset can propagate through: lakehouse/warehouse → agent → Salesforce → downstream workflows.

The data quality dimensions that matter most for agentic workflows

You don’t need 40 metrics. Start with the ones that prevent wrong actions:

-

Freshness: Is data up to date enough to act?

-

Completeness: Are required fields present for action paths?

-

Uniqueness / deduplication: Is “one customer” truly one customer?

-

Validity: Do values match allowed formats/ranges/enums?

-

Consistency: Do systems agree on definitions and keys?

-

Lineage/provenance: Can you trace what the agent relied on?

Snowflake explicitly positions data metric functions (DMFs) to monitor integrity metrics like freshness and counts for duplicates/NULLs/rows/unique values. (Snowflake)

Problem

Impact

A simple story framework: problem → impact → solution

Problem

Your agent is “smart,” but your data estate is not consistently governed. CRM and warehouse definitions diverge. Duplicates exist. Freshness isn’t enforced. Exceptions aren’t operationalized.

Impact

-

Wrong updates in Salesforce

-

Incorrect routing/priority decisions

-

Customer-facing errors

-

Extra manual rework and loss of trust

-

Risk exposure (audits, compliance, SLAs)

Solution

Treat data quality as a reliability system for agentic AI:

-

Data quality management defines what “good” is and who owns it

-

Data quality control enforces it in the flow

-

Data quality assurance proves it stays true over time

-

Data control changes agent behavior when quality degrades

Mini runbook: how IT leaders make agentic AI reliable in 30–60 days

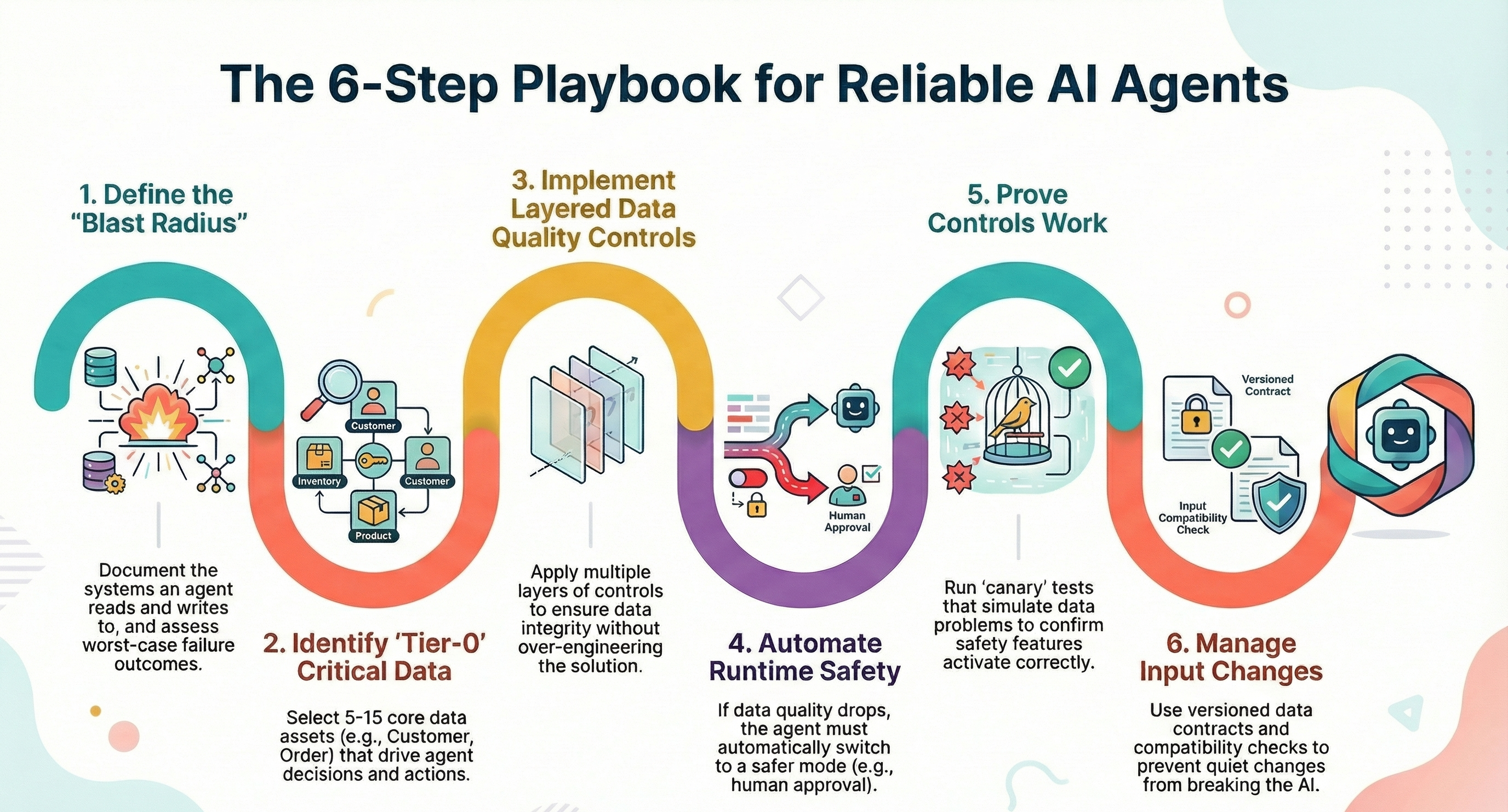

1) Define the “agent blast radius” first

For each agentic workflow, document:

-

What systems it reads (Snowflake/Databricks/BigQuery, catalogs, CRM)

-

What systems it writes (Salesforce, ticketing, workflow tools)

-

Worst-case failure outcomes (customer impact, financial loss, compliance)

Deliverable: Agent Risk Register (top 10 failure scenarios + owners)

2) Tier your data: pick Tier-0 “AI-critical” assets

Start with 5–15 assets that drive decisions and actions (typical examples):

-

Customer / Account / Contact

-

Entitlement / Contract

-

Case / Order / Product

-

Pricing / Inventory (if applicable)

Deliverable: Tier-0 Dataset List with SLOs and owners

3) Implement data quality control in three layers (fastest path to reliability)

This is where most teams either over-engineer—or under-operationalize. The sweet spot is layered controls:

Layer A: Pipeline gates (stop bad records early)

-

Databricks: Pipeline expectations apply quality constraints in ETL pipelines and can fail updates or drop invalid records. (Databricks)

-

AWS: AWS Glue Data Quality uses DQDL to define rules for data quality checks. (AWS)

-

Informatica (Cloud Data Quality / Profiling): Use rule specifications and profiling assets to encode reusable business rules and prevent invalid data from progressing downstream (especially in hybrid estates). (Informatica)

-

Salesforce: Use Duplicate Rules (with Matching Rules) to block or alert when duplicates are created—critical when agents read/write Accounts, Contacts, Leads, or Cases. (Salesforce)

Layer B: Continuous scanning & monitoring (detect drift)

-

BigQuery + Dataplex Universal Catalog: Data quality scans validate BigQuery tables against defined rules and log alerts when requirements aren’t met. (Google Cloud)

-

Snowflake: DMFs can be associated with tables/views to run regular data quality checks and monitor core integrity signals. (Snowflake)

-

Informatica: Scorecards and rule occurrences provide a way to measure and monitor data quality progress over time. (Informatica)

Layer C: Governance + remediation workflows (make quality operable)

-

Microsoft Purview Unified Catalog: Supports data quality rules/scans and creates actions when thresholds aren’t met (assignable and trackable). (Microsoft Learn)

4) Add “data control” to agent runtime behavior (the step most teams miss)

Monitoring isn’t safety unless it changes what agents do.

When Tier-0 health drops below threshold, your agent should automatically:

-

switch from Do → Suggest (human approval required)

-

go read-only for sensitive actions

-

route exceptions to a queue with context

-

log an incident: dataset, failed rule, lineage, impacted workflow

This is how you prevent “Friday 4:45 PM” incidents from becoming business events.

5) Make it real with data quality assurance (prove controls work)

Create a lightweight assurance loop:

-

Rule coverage on Tier-0 assets (% of Tier-0 with enforced rules)

-

Tests for schema + semantic rules

-

Canary runs that intentionally simulate drift and confirm the agent enters safe mode

6) Add change control + versioning for agent inputs

Agentic AI breaks when definitions change quietly. Add:

-

versioned data contracts for Tier-0 assets

-

deprecation windows

-

compatibility checks before releases

KPIs that matter to IT leaders (3–5 you can actually run)

1. Tier-0 Freshness SLO compliance (%)

2. Rule coverage on Tier-0 assets (%)

3. Defect escape rate (quality incidents reaching agent runtime)

4. MTTD / MTTR for agent-impacting data quality incidents

5. Safe-mode activations (how often autonomy is reduced due to data health)

%20-%20visual%20selection%20(2).png?width=2772&height=1133&name=KPIs%20that%20matter%20to%20IT%20leaders%20(3%E2%80%935%20you%20can%20actually%20run)%20-%20visual%20selection%20(2).png)

These KPIs turn “data quality” into something fundable: reliability, risk reduction, and faster scaling of automation.

Common pitfalls (and how to avoid them)

-

Monitoring without enforcement: dashboards don’t prevent bad actions—gates and safe modes do.

-

Rules built for reporting, not automation: agents need “safe to act” thresholds.

-

No ownership: if Tier-0 assets don’t have clear owners, the same defects repeat.

-

Quality isn’t part of incident response: alerts must create tickets/actions and have SLAs.

-

CRM truth diverges from warehouse truth: Salesforce objects often become Tier-0; duplicates and missing fields become agent failures. (Salesforce)

-

No semantic alignment: “active customer” meaning differs across domains; agents can’t infer semantics reliably—define and enforce them.

Analyst insights (proof without the fluff)

-

Gartner: warns that AI-ready data requirements differ from traditional data management and predicts widespread AI project abandonment without AI-ready data. (Gartner)

What this means: treat data quality management as a prerequisite for scaling agentic AI, not a cleanup task after pilots. -

McKinsey: recommends a structured, layered approach to adopting agentic systems safely and securely—starting with risks and governance and moving toward controls. (McKinsey & Company)

What this means: data control and quality controls are part of “secure agent deployment,” not just data engineering hygiene. -

Forrester: notes governance is entering an “agentic era,” shifting from compliance-only to enabling trust, agility, and AI readiness at scale. (Forrester)

What this means: governance and remediation workflows must be more automated to keep up with autonomous systems. -

IDC: frames AI governance as balancing innovation with risk management and highlights pillars such as transparency, security, compliance, and human-in-the-loop governance. (IDC)What this means: when data quality drops, human oversight and safe-mode behaviors should kick in by design.

Practical checklist

This week

-

Identify 1–2 agentic workflows and define blast radius

-

List Tier-0 datasets/objects they depend on

-

Pick 5 core rules per Tier-0 asset (freshness, completeness, uniqueness, validity, consistency)

Next 2–4 weeks

-

Add pipeline gates (Databricks expectations/AWS DQDL/Informatica rules/Salesforce duplicate rules) (Databricks)

-

Turn on continuous monitoring (Snowflake DMFs/BigQuery scans/Informatica scorecards) (Snowflake)

-

Define safe-mode behaviors and connect triggers

Next 30–60 days

-

Establish governance + remediation workflow (Purview actions, owners, SLAs) (Microsoft Learn)

-

Add change control + versioning for Tier-0 contracts

-

Track KPIs and expand to the next set of workflows

%20-%20visual%20selection%20(1).png?width=2877&height=1240&name=Practical%20checklist%20(copy_paste)%20-%20visual%20selection%20(1).png)

A Thoughtful Next Step: how Pacific Data Integrators can help

If you’re scaling agentic AI in production, the most practical next step is to turn data quality management into a reliability program: define Tier-0 assets, implement enforceable data quality control gates, prove data quality assurance coverage, and wire data control signals into agent behavior (approve/suggest/read-only) when quality drops.

Pacific Data Integrators can help you design and implement this end-to-end across Salesforce, Informatica, Snowflake, Databricks, BigQuery, AWS, and Azure—with a phased plan that delivers measurable reliability improvements without a “boil the ocean” cleanup effort.

Blog Post by PDI Marketing Team

Pacific Data Integrators Offers Unique Data Solutions Leveraging AI/ML, Large Language Models (Open AI: GPT-4, Meta: Llama2, Databricks: Dolly), Cloud, Data Management and Analytics Technologies, Helping Leading Organizations Solve Their Critical Business Challenges, Drive Data Driven Insights, Improve Decision-Making, and Achieve Business Objectives.